Oracle 19c (19.6.0) - Zero-Downtime Oracle Grid Infrastructure Patching (ZDOGIP) Feature

This document describes how to patch Oracle Grid Infrastructure with zero impact on the database.

This feature was introduced in the Oracle 19c (19.6) RU, so the source version must be at least 19.6.

1. Two Node RAC with Oracle Linux 8.0, Oracle 19c GI (19.6.0) and Oracle 19c (19.6.0) Database.

2. Oracle 19c GI (19.6.0) running on Oracle Linux 8.0 with no ACFS/AFD configured.

a. Existing GI Home: /u01/app/19.3.0/grid with applied Oracle 19c (19.6.0) RU

b. Existing RDBMS Home: /u01/app/oracle/product/19.3.0/db_1 with applied Oracle 19c (19.6.0) RU

3. Install Oracle 19c GI (19.3.0) and apply Oracle 19c (19.7.0) RU in a separate GI Home

a. New GI Home: /u01/app/19.7.0/grid

b. ./gridSetup.sh -applyPSU /home/oracle/30899722

c. Choose the option "Install Software only" and select all the nodes.

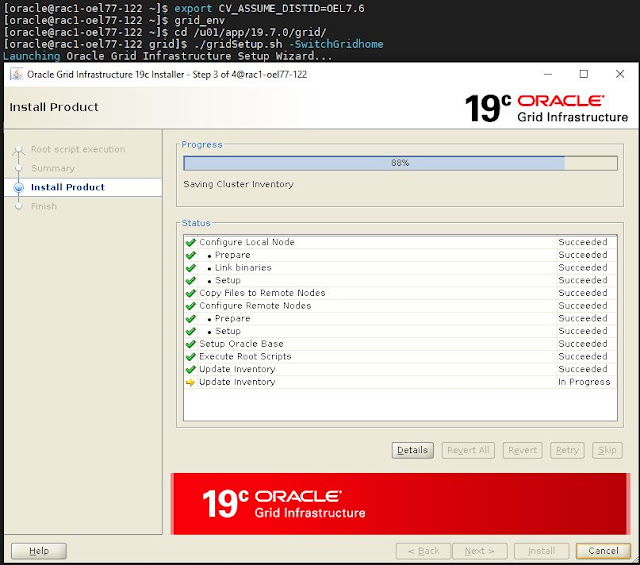

4. Switching the Grid Infrastructure Home

a. Run the gridSetup.sh from the target home

b. /u01/app/19.7.0/grid/gridSetup.sh -SwitchGridhome

5. Execute the following script on all cluster nodes (rac1-oel77-122 & rac2-oel77-123)

a. /u01/app/19.7.0/grid/root.sh -transparent -nodriverupdate

6. CRS status after patching.

7. After patching, Oracle GI is at Oracle 19c (19.7.0) and Oracle RDBMS remains at Oracle 19c (19.6.0).

Introduction:

This document describes how to patch Oracle Grid Infrastructure with zero impact on the database.

This feature was introduced in the 19.6 RU, so the source version must be at least 19.6.

NOTE: This procedure is only valid for systems that are NOT using Grid Infrastructure OS drivers (AFD, ACFS, ADVM).

If GI drivers are in use, the database instance running on the node being updated must be stopped and restarted. In this case, you must rely on rolling patch installation.

For more details, refer to:

Zero-Downtime Oracle Grid Infrastructure Patching (ZDOGIP). (Doc ID 2635015.1)
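Before starting, it can help to confirm on each node that no GI kernel drivers are loaded. The following is a minimal sketch, not an official check: gi_drivers_loaded is a hypothetical helper name, and it assumes the drivers appear in lsmod output as modules named oracleacfs, oracleadvm, oracleoks or oracleafd. Verify those names on your platform before relying on it.

```shell
#!/usr/bin/env bash
# Sketch: detect Oracle GI kernel drivers from lsmod-style text on stdin.
# Assumption: the drivers are listed as oracleacfs, oracleadvm, oracleoks
# or oracleafd in the first column of lsmod output.
gi_drivers_loaded() {
  # Exit status 0 if any matching module is listed, 1 otherwise.
  awk '$1 ~ /^oracle(acfs|advm|oks|afd)$/ { found = 1 } END { exit !found }'
}

# Example (run on each cluster node):
#   if lsmod | gi_drivers_loaded; then
#     echo "GI drivers in use: fall back to rolling patching"
#   else
#     echo "No GI drivers loaded"
#   fi
```

The helper reads from stdin so that it can be tried against saved lsmod output as well as on a live node.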

Step-1: Oracle 19c GI (19.6.0) running on Oracle Linux 8.0 with no ACFS/AFD configured.

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.chad

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.net1.network

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.ons

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.DATADG.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 ONLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac1-oel77-122 169.254.18.68 10.1.4

.122,STABLE

ora.OCRVD.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.RECODG.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 ONLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac1-oel77-122 Open,STABLE

ora.orcldb.db

1 ONLINE ONLINE rac1-oel77-122 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

2 ONLINE ONLINE rac2-oel77-123 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.rac1-oel77-122.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.rac2-oel77-123.vip

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.scan2.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.scan3.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

--------------------------------------------------------------------------------

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Sat Aug 1 11:49:28 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

SQL> select instance_name,instance_number from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER

---------------- ---------------

+ASM1 1

+ASM2 2

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ db_env

[oracle@rac1-oel77-122 ~]$ srvctl status database -d orcldb

Instance orcldb1 is running on node rac1-oel77-122

Instance orcldb2 is running on node rac2-oel77-123

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ sqlplus sys@orcldb as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Sat Aug 1 11:50:19 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Enter password:

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

4 PDB2 READ WRITE NO

SQL> select instance_name,instance_number from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER

---------------- ---------------

orcldb2 2

orcldb1 1

SQL>
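The checks above record the state before patching. One way to make the later comparison less manual is to save the list of running instances and diff it afterwards. This is only a sketch: running_instances is a hypothetical helper, and it assumes srvctl reports running instances in the "Instance ... is running on node ..." form shown above.

```shell
#!/usr/bin/env bash
# Sketch: keep only the "is running on node" lines from
# `srvctl status database` output on stdin, sorted for stable diffs.
running_instances() {
  grep ' is running on node ' | sort
}

# Example:
#   srvctl status database -d orcldb | running_instances > /tmp/before.txt
#   ... switch the GI home ...
#   srvctl status database -d orcldb | running_instances | diff /tmp/before.txt -
```

An empty diff after the switch suggests that the same instances are still running on the same nodes.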

Step-2: Install Oracle 19c GI (19.3.0) and apply Oracle 19c (19.7.0) RU in a separate GI Home

a. New GI Home: /u01/app/19.7.0/grid

b. Choose the option "Install Software only" and select all the nodes.

[root@rac1-oel77-122 ~]# cd /u01/app/

[root@rac1-oel77-122 app]# mkdir -p 19.7.0/grid

[root@rac1-oel77-122 app]# chown -R oracle:oinstall 19.7.0

[root@rac1-oel77-122 app]# chown -R oracle:oinstall 19.7.0/grid/

[root@rac1-oel77-122 app]#

Note: Repeat the same steps on the other cluster nodes.
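The directory preparation has to be repeated on every node, so it may be convenient to generate the commands once. The sketch below only prints the commands and runs nothing. new_home_cmds is a hypothetical helper, and the use of ssh as root is an assumption about your environment; many sites would use sudo or a configuration tool instead.

```shell
#!/usr/bin/env bash
# Sketch: print (do not execute) the commands that create the new GI home
# and set its ownership on each node. Root ssh access is an assumption.
new_home_cmds() {
  gi_home=$1
  shift
  for node in "$@"; do
    # chown is applied to the parent directory, as in the transcript above.
    printf 'ssh root@%s "mkdir -p %s && chown -R oracle:oinstall %s"\n' \
      "$node" "$gi_home" "$(dirname "$gi_home")"
  done
}

new_home_cmds /u01/app/19.7.0/grid rac1-oel77-122 rac2-oel77-123
```

Review the printed lines before running them by hand on each node.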

[oracle@rac1-oel77-122 grid]$ unzip LINUX.X64_193000_grid_home.zip

Archive: LINUX.X64_193000_grid_home.zip

creating: instantclient/

inflating: instantclient/libsqlplusic.so

creating: opmn/

creating: opmn/logs/

creating: opmn/conf/

inflating: opmn/conf/ons.config

[oracle@rac1-oel77-122 ~]$ unzip p6880880_190000_Linux-x86-64.zip -d /u01/app/19.7.0/grid/

Archive: p6880880_190000_Linux-x86-64.zip

replace /u01/app/19.7.0/grid/OPatch/emdpatch.pl? [y]es, [n]o, [A]ll, [N]one, [r]ename: A

inflating: /u01/app/19.7.0/grid/OPatch/emdpatch.pl

inflating: /u01/app/19.7.0/grid/OPatch/oracle_common/modules/com.oracle.glcm.common-logging_1.6.5.0.jar

inflating: /u01/app/19.7.0/grid/OPatch/oplan/oplan

inflating: /u01/app/19.7.0/grid/OPatch/datapatch

[oracle@rac1-oel77-122 ~]$ chmod u+x p30899722_190000_Linux-x86-64.zip

[oracle@rac1-oel77-122 ~]$ unzip p30899722_190000_Linux-x86-64.zip

Archive: p30899722_190000_Linux-x86-64.zip

creating: 30899722/

creating: 30899722/30869156/

inflating: 30899722/30869156/README.txt

inflating: 30899722/30869156/README.html

creating: 30899722/30869156/etc/

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ cd /u01/app/19.7.0/grid/

[oracle@rac1-oel77-122 grid]$ ls

addnode crs deinstall evm install jdk network ord perl racg root.sh.old slax tomcat wlm

assistants css demo gpnp instantclient jlib nls ords plsql rdbms root.sh.old.1 sqlpatch ucp wwg

bin cv diagnostics gridSetup.sh inventory ldap OPatch oss precomp relnotes rootupgrade.sh sqlplus usm xag

cha dbjava dmu has javavm lib opmn oui QOpatch rhp runcluvfy.sh srvm utl xdk

clone dbs env.ora hs jdbc md oracore owm qos root.sh sdk suptools welcome.html

[oracle@rac1-oel77-122 ~]$ export CV_ASSUME_DISTID=OEL7.6

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ cd /u01/app/19.7.0/grid/

[oracle@rac1-oel77-122 grid]$ ./gridSetup.sh -applyPSU /home/oracle/30899722

Preparing the home to patch...

Applying the patch /home/oracle/30899722...

Successfully applied the patch.

The log can be found at: /u01/app/oraInventory/logs/GridSetupActions2020-08-01_11-09-34AM/installerPatchActions_2020-08-01_11-09-34AM.log

Launching Oracle Grid Infrastructure Setup Wizard...

The response file for this session can be found at:

/u01/app/19.7.0/grid/install/response/grid_2020-08-01_11-09-34AM.rsp

You can find the log of this install session at:

/u01/app/oraInventory/logs/GridSetupActions2020-08-01_11-09-34AM/gridSetupActions2020-08-01_11-09-34AM.log

[oracle@rac1-oel77-122 grid]$
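After the RU has been applied to the new home, it is worth confirming that the patch is registered there before switching. A minimal sketch follows. has_patch is a hypothetical helper, and it assumes that opatch lspatches prints one patch per line in the form "<patch id>;<description>".

```shell
#!/usr/bin/env bash
# Sketch: succeed if `opatch lspatches`-style text on stdin lists the
# given patch ID at the start of a line, followed by a semicolon.
has_patch() {
  grep -q "^$1;"
}

# Example (30869156 is the Database RU sub-patch unpacked above):
#   /u01/app/19.7.0/grid/OPatch/opatch lspatches | has_patch 30869156 \
#     && echo "19.7 RU is present in the new GI home"
```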

[root@rac1-oel77-122 ~]# /u01/app/19.7.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/19.7.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

To configure Grid Infrastructure for a Cluster execute the following command as oracle user:

/u01/app/19.7.0/grid/gridSetup.sh

This command launches the Grid Infrastructure Setup Wizard. The wizard also supports

silent operation, and the parameters can be passed through the response file that

is available in the installation media.

[root@rac1-oel77-122 ~]#

[root@rac2-oel77-123 ~]# /u01/app/19.7.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/19.7.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

To configure Grid Infrastructure for a Cluster execute the following command as oracle user:

/u01/app/19.7.0/grid/gridSetup.sh

This command launches the Grid Infrastructure Setup Wizard. The wizard also supports

silent operation, and the parameters can be passed through the response file that is

available in the installation media.

[root@rac2-oel77-123 ~]#

Step-4: Switching the Grid Infrastructure Home

a. Run the gridSetup.sh from the target home

b. /u01/app/19.7.0/grid/gridSetup.sh -SwitchGridhome

c. Execute the following script on all cluster nodes (rac1-oel77-122 & rac2-oel77-123), one node at a time

/u01/app/19.7.0/grid/root.sh -transparent -nodriverupdate

Executing 'root.sh' on rac1-oel77-122

======================================

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 9032 7386 0 11:56 pts/3 00:00:00 grep --color=auto pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 17470 1 0 00:16 ? 00:00:01 mdb_pmon_-MGMTDB

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ su - root

Password:

[root@rac1-oel77-122 ~]# /u01/app/19.7.0/grid/root.sh -transparent -nodriverupdate

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/19.7.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

LD_LIBRARY_PATH='/u01/app/19.3.0/grid/lib:/u01/app/19.7.0/grid/lib:'

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac1-oel77-122/crsconfig/rootcrs_rac1-oel77-122_2020-08-01_12-06-52AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac1-oel77-122/crsconfig/rootcrs_rac1-oel77-122_2020-08-01_12-06-52AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac1-oel77-122/crsconfig/crs_prepatch_rac1-oel77-122_2020-08-01_12-06-53AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac1-oel77-122/crsconfig/crs_prepatch_rac1-oel77-122_2020-08-01_12-06-53AM.log

2020/08/01 12:07:10 CLSRSC-347: Successfully unlock /u01/app/19.7.0/grid

2020/08/01 12:07:12 CLSRSC-671: Pre-patch steps for patching GI home successfully completed.

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac1-oel77-122/crsconfig/crs_postpatch_rac1-oel77-122_2020-08-01_12-07-12AM.log

Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [2701864972].

2020/08/01 12:07:32 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd_dummy.service'

2020/08/01 12:12:07 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [ROLLING PATCH]. The cluster active patch level is [2701864972].

2020/08/01 12:13:14 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2020/08/01 12:13:15 CLSRSC-672: Post-patch steps for patching GI home successfully completed.

[root@rac1-oel77-122 ~]#
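The root.sh output above shows the cluster in the ROLLING PATCH state after the first node has been switched. The same line can be obtained at any time with crsctl query crs activeversion -f, and the two values of interest can be pulled out with sed. This is a sketch only; crs_upgrade_state and crs_patch_level are hypothetical helper names, and the parsing assumes the message wording shown in the transcript.

```shell
#!/usr/bin/env bash
# Sketch: extract fields from the "active version" message on stdin.
crs_upgrade_state() {
  # Prints the text inside the brackets after "cluster upgrade state is".
  sed -n 's/.*cluster upgrade state is \[\([^]]*\)].*/\1/p'
}

crs_patch_level() {
  # Prints the text inside the brackets after "active patch level is".
  sed -n 's/.*active patch level is \[\([^]]*\)].*/\1/p'
}

# Example:
#   crsctl query crs activeversion -f | crs_upgrade_state
#   crsctl query crs activeversion -f | crs_patch_level
```

While root.sh has run on only some nodes, the state is expected to read ROLLING PATCH. It should return to NORMAL, with a new patch level, once the last node is done.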

Checking the status of the database instance (orcldb1) while root.sh runs

=================================================

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 10672 10982 0 11:57 pts/2 00:00:00 grep --color=auto pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 17470 1 0 00:16 ? 00:00:01 mdb_pmon_-MGMTDB

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ ps -ef | grep d.bin

oracle 2401 2388 0 11:54 pts/1 00:00:00 /u01/app/19.7.0/grid/perl/bin/perl -I/u01/app/19.7.0/grid/perl/lib -I/u01/app/19.7.0/grid/bin /u01/app/19.7.0/grid/bin/gridSetup.pl -J-DCVU_OS_SETTINGS=SHELL_NOFILE_SOFT_LIMIT:1024,SHELL_STACK_SOFT_LIMIT:10240,SHELL_UMASK:0022 -SwitchGridhome

root 10768 1 0 Jul31 ? 00:05:28 /u01/app/19.3.0/grid/bin/ohasd.bin reboot BLOCKING_STACK_LOCALE_OHAS=AMERICAN_AMERICA.AL32UTF8

root 10871 1 0 Jul31 ? 00:02:03 /u01/app/19.3.0/grid/bin/orarootagent.bin

oracle 10956 1 0 Jul31 ? 00:03:10 /u01/app/19.3.0/grid/bin/oraagent.bin

oracle 10983 1 0 Jul31 ? 00:01:07 /u01/app/19.3.0/grid/bin/mdnsd.bin

oracle 10985 1 0 Jul31 ? 00:02:41 /u01/app/19.3.0/grid/bin/evmd.bin

oracle 11017 1 0 Jul31 ? 00:01:11 /u01/app/19.3.0/grid/bin/gpnpd.bin

oracle 11070 10985 0 Jul31 ? 00:01:00 /u01/app/19.3.0/grid/bin/evmlogger.bin -o /u01/app/19.3.0/grid/log/[HOSTNAME]/evmd/evmlogger.info -l /u01/app/19.3.0/grid/log/[HOSTNAME]/evmd/evmlogger.log

oracle 11087 1 0 Jul31 ? 00:02:30 /u01/app/19.3.0/grid/bin/gipcd.bin

root 11135 1 0 Jul31 ? 00:01:18 /u01/app/19.3.0/grid/bin/cssdmonitor

root 11138 1 1 Jul31 ? 00:08:46 /u01/app/19.3.0/grid/bin/osysmond.bin

root 11168 1 0 Jul31 ? 00:01:30 /u01/app/19.3.0/grid/bin/cssdagent

oracle 11186 1 0 Jul31 ? 00:05:15 /u01/app/19.3.0/grid/bin/ocssd.bin -S 1

root 11788 1 1 Jul31 ? 00:09:23 /u01/app/19.3.0/grid/bin/ologgerd -M

root 11856 1 0 Jul31 ? 00:02:47 /u01/app/19.3.0/grid/bin/octssd.bin reboot

root 12409 1 0 Jul31 ? 00:05:50 /u01/app/19.3.0/grid/bin/crsd.bin reboot

root 13478 1 1 Jul31 ? 00:08:33 /u01/app/19.3.0/grid/bin/orarootagent.bin

oracle 13491 1 1 Jul31 ? 00:08:48 /u01/app/19.3.0/grid/bin/oraagent.bin

oracle 13529 1 0 Jul31 ? 00:00:09 /u01/app/19.3.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

root 13965 11138 0 Jul31 ? 00:00:42 /u01/app/19.3.0/grid/perl/bin/perl /u01/app/19.3.0/grid/bin/diagsnap.pl start

oracle 14621 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

oracle 14665 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

oracle 14748 1 0 Jul31 ? 00:01:12 /u01/app/19.3.0/grid/bin/scriptagent.bin

oracle 16894 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

oracle 17344 1 0 00:16 ? 00:00:02 /u01/app/19.3.0/grid/bin/tnslsnr MGMTLSNR -no_crs_notify -inherit

oracle 19147 19122 0 12:06 ? 00:00:00 /bin/sh /u01/app/19.7.0/grid/bin/orald -o /u01/app/19.7.0/grid/rdbms/lib/oracle -m64 -z noexecstack -Wl,--disable-new-dtags -L/u01/app/19.7.0/grid/rdbms/lib/ -L/u01/app/19.7.0/grid/lib/ -L/u01/app/19.7.0/grid/lib/stubs/ -Wl,-E /u01/app/19.7.0/grid/rdbms/lib/opimai.o /u01/app/19.7.0/grid/rdbms/lib/ssoraed.o /u01/app/19.7.0/grid/rdbms/lib/ttcsoi.o -Wl,--whole-archive -lperfsrv19 -Wl,--no-whole-archive /u01/app/19.7.0/grid/lib/nautab.o /u01/app/19.7.0/grid/lib/naeet.o /u01/app/19.7.0/grid/lib/naect.o /u01/app/19.7.0/grid/lib/naedhs.o /u01/app/19.7.0/grid/rdbms/lib/config.o -ldmext -lserver19 -lodm19 -lofs -lcell19 -lnnet19 -lskgxp19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lxml19 -lcore19 -lunls19 -lsnls19 -lnls19 -lcore19 -lnls19 -lclient19 -lvsnst19 -lcommon19 -lgeneric19 -lknlopt -loraolap19 -lskjcx19 -lslax19 -lpls19 -lrt -lplp19 -ldmext -lserver19 -lclient19 -lvsnst19 -lcommon19 -lgeneric19 -lavstub19 -lknlopt -lslax19 -lpls19 -lrt -lplp19 -ljavavm19 -lserver19 -lwwg -lnbeq19 -lntmq19 -lnhost19 -lnus19 -lnldap19 -lldapclnt19 -lngsmshd19 -lntcp19 -lntcps19 -lntcp19 -lntns19 -lntwss19 -lncrypt19 -lnsgr19 -lnzjs19 -ln19 -lnl19 -lngsmshd19 -lnro19 -lnbeq19 -lntmq19 -lnhost19 -lnus19 -lnldap19 -lldapclnt19 -lngsmshd19 -lntcp19 -lntcps19 -lntcp19 -lntns19 -lntwss19 -lncrypt19 -lnsgr19 -lnzjs19 -ln19 -lnl19 -lngsmshd19 -lnnzst19 -lzt19 -lztkg19 -lmm -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lxml19 -lcore19 -lunls19 -lsnls19 -lnls19 -lcore19 -lnls19 -lztkg19 -lnbeq19 -lntmq19 -lnhost19 -lnus19 -lnldap19 -lldapclnt19 -lngsmshd19 -lntcp19 -lntcps19 -lntcp19 -lntns19 -lntwss19 -lncrypt19 -lnsgr19 -lnzjs19 -ln19 -lnl19 -lngsmshd19 -lnro19 -lnbeq19 -lntmq19 -lnhost19 -lnus19 -lnldap19 -lldapclnt19 -lngsmshd19 -lntcp19 -lntcps19 -lntcp19 -lntns19 -lntwss19 -lncrypt19 -lnsgr19 -lnzjs19 -ln19 -lnl19 -lngsmshd19 -lnnzst19 -lzt19 -lztkg19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 
-lcore19 -lsnls19 -lnls19 -lxml19 -lcore19 -lunls19 -lsnls19 -lnls19 -lcore19 -lnls19 -lordsdo19 -lserver19 -L/u01/app/19.7.0/grid/ctx/lib/ -lctxc19 -lctx19 -lzx19 -lgx19 -lctx19 -lzx19 -lgx19 -lclscest19 -loevm -lclsra19 -ldbcfg19 -lhasgen19 -lskgxn2 -lnnzst19 -lzt19 -lxml19 -lgeneric19 -locr19 -locrb19 -locrutl19 -lhasgen19 -lskgxn2 -lnnzst19 -lzt19 -lxml19 -lgeneric19 -lgeneric19 -lorazip -loraz -llzopro5 -lorabz2 -lorazstd -loralz4 -lipp_z -lipp_bz2 -lippdc -lipps -lippcore -lippcp -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lxml19 -lcore19 -lunls19 -lsnls19 -lnls19 -lcore19 -lnls19 -lsnls19 -lunls19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lcore19 -lsnls19 -lnls19 -lxml19 -lcore19 -lunls19 -lsnls19 -lnls19 -lcore19 -lnls19 -lasmclnt19 -lcommon19 -lcore19 -ledtn19 -laio -lons -lmql1 -lipc1 -lfthread19 -ldl -lm -lpthread -lnsl -lirc -limf -lirc -lrt -laio -lresolv -lsvml -Wl,-rpath,/u01/app/19.7.0/grid/lib -lm -ldl -lm -lpthread -lnsl -lirc -limf -lirc -lrt -laio -lresolv -lsvml -ldl -lm -L/u01/app/19.7.0/grid/lib

oracle 19252 10982 0 12:06 pts/2 00:00:00 grep --color=auto d.bin

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ ps -ef | grep d.bin

oracle 2401 2388 0 11:54 pts/1 00:00:00 /u01/app/19.7.0/grid/perl/bin/perl -I/u01/app/19.7.0/grid/perl/lib -I/u01/app/19.7.0/grid/bin /u01/app/19.7.0/grid/bin/gridSetup.pl -J-DCVU_OS_SETTINGS=SHELL_NOFILE_SOFT_LIMIT:1024,SHELL_STACK_SOFT_LIMIT:10240,SHELL_UMASK:0022 -SwitchGridhome

root 10768 1 0 Jul31 ? 00:05:29 /u01/app/19.3.0/grid/bin/ohasd.bin reboot BLOCKING_STACK_LOCALE_OHAS=AMERICAN_AMERICA.AL32UTF8

root 10871 1 0 Jul31 ? 00:02:03 /u01/app/19.3.0/grid/bin/orarootagent.bin

oracle 10956 1 0 Jul31 ? 00:03:10 /u01/app/19.3.0/grid/bin/oraagent.bin

oracle 10983 1 0 Jul31 ? 00:01:07 /u01/app/19.3.0/grid/bin/mdnsd.bin

oracle 10985 1 0 Jul31 ? 00:02:41 /u01/app/19.3.0/grid/bin/evmd.bin

oracle 11017 1 0 Jul31 ? 00:01:11 /u01/app/19.3.0/grid/bin/gpnpd.bin

oracle 11070 10985 0 Jul31 ? 00:01:00 /u01/app/19.3.0/grid/bin/evmlogger.bin -o /u01/app/19.3.0/grid/log/[HOSTNAME]/evmd/evmlogger.info -l /u01/app/19.3.0/grid/log/[HOSTNAME]/evmd/evmlogger.log

oracle 11087 1 0 Jul31 ? 00:02:30 /u01/app/19.3.0/grid/bin/gipcd.bin

root 11135 1 0 Jul31 ? 00:01:18 /u01/app/19.3.0/grid/bin/cssdmonitor

root 11138 1 1 Jul31 ? 00:08:47 /u01/app/19.3.0/grid/bin/osysmond.bin

root 11168 1 0 Jul31 ? 00:01:30 /u01/app/19.3.0/grid/bin/cssdagent

oracle 11186 1 0 Jul31 ? 00:05:15 /u01/app/19.3.0/grid/bin/ocssd.bin -S 1

root 11788 1 1 Jul31 ? 00:09:23 /u01/app/19.3.0/grid/bin/ologgerd -M

root 11856 1 0 Jul31 ? 00:02:47 /u01/app/19.3.0/grid/bin/octssd.bin reboot

root 12409 1 0 Jul31 ? 00:05:50 /u01/app/19.3.0/grid/bin/crsd.bin reboot

root 13478 1 1 Jul31 ? 00:08:33 /u01/app/19.3.0/grid/bin/orarootagent.bin

oracle 13491 1 1 Jul31 ? 00:08:48 /u01/app/19.3.0/grid/bin/oraagent.bin

oracle 13529 1 0 Jul31 ? 00:00:09 /u01/app/19.3.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

root 13965 11138 0 Jul31 ? 00:00:42 /u01/app/19.3.0/grid/perl/bin/perl /u01/app/19.3.0/grid/bin/diagsnap.pl start

oracle 14621 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER_SCAN2 -no_crs_notify -inherit

oracle 14665 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER_SCAN3 -no_crs_notify -inherit

oracle 14748 1 0 Jul31 ? 00:01:12 /u01/app/19.3.0/grid/bin/scriptagent.bin

oracle 16894 1 0 Jul31 ? 00:00:00 /u01/app/19.3.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

oracle 17344 1 0 00:16 ? 00:00:02 /u01/app/19.3.0/grid/bin/tnslsnr MGMTLSNR -no_crs_notify -inherit

root 19555 19344 0 12:06 pts/3 00:00:00 sh -c /bin/su oracle -c ' echo CLSRSC_START; /u01/app/19.3.0/grid/bin/cluutil -ckpt -global -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_PATCHINFO -pname FIRSTNODE_TOPATCH -pvalue rac1-oel77-122 -nodelist rac1-oel77-122,rac2-oel77-123 ' 2>&1

root 19556 19555 0 12:06 pts/3 00:00:00 /bin/su oracle -c echo CLSRSC_START; /u01/app/19.3.0/grid/bin/cluutil -ckpt -global -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_PATCHINFO -pname FIRSTNODE_TOPATCH -pvalue rac1-oel77-122 -nodelist rac1-oel77-122,rac2-oel77-123

oracle 19557 19556 0 12:06 ? 00:00:00 bash -c echo CLSRSC_START; /u01/app/19.3.0/grid/bin/cluutil -ckpt -global -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_PATCHINFO -pname FIRSTNODE_TOPATCH -pvalue rac1-oel77-122 -nodelist rac1-oel77-122,rac2-oel77-123

oracle 19558 19557 0 12:06 ? 00:00:00 /bin/sh /u01/app/19.3.0/grid/bin/cluutil -ckpt -global -oraclebase /u01/app/oracle -writeckpt -name ROOTCRS_PATCHINFO -pname FIRSTNODE_TOPATCH -pvalue rac1-oel77-122 -nodelist rac1-oel77-122,rac2-oel77-123

oracle 19574 10982 0 12:06 pts/2 00:00:00 grep --color=auto d.bin

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 17470 1 0 00:16 ? 00:00:01 mdb_pmon_-MGMTDB

oracle 22177 10982 0 12:07 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 17470 1 0 00:16 ? 00:00:01 mdb_pmon_-MGMTDB

oracle 22328 10982 0 12:07 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1 ========> MGMTDB relocated (mdb_pmon_-MGMTDB no longer runs on this node)

oracle 22507 10982 0 12:08 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 24709 10982 0 12:11 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 12184 1 0 Jul31 ? 00:00:01 asm_pmon_+ASM1

oracle 15010 1 0 01:25 ? 00:00:01 ora_pmon_orcldb1

oracle 24823 10982 0 12:11 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> +ASM1 instance is down

oracle 26086 10982 0 12:12 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> Database instance (orcldb1) is up and running.

oracle 26150 10982 0 12:12 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> Database instance (orcldb1) is up and running.

oracle 26273 10982 0 12:12 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> Database instance (orcldb1) is up and running.

oracle 26872 10982 0 12:12 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> Database instance (orcldb1) is up and running.

oracle 27189 10982 0 12:12 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 15010 1 0 01:25 ? 00:00:02 ora_pmon_orcldb1 ========> Database instance (orcldb1) is up and running.

oracle 27241 1 0 12:12 ? 00:00:00 asm_pmon_+ASM1 ========> +ASM1 instance is back up (new PID 27241, started at 12:12)

oracle 28643 10982 0 12:13 pts/2 00:00:00 grep --color=auto pmon

[oracle@rac1-oel77-122 ~]$
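The repeated ps -ef | grep pmon checks above can be reduced to a small filter that lists only the instance names, which makes it easier to see at a glance when +ASM1 or -MGMTDB disappears and returns. This is a sketch: pmon_instances is a hypothetical helper, and it assumes the pmon process name is the last field of each ps -ef line, as in the transcript.

```shell
#!/usr/bin/env bash
# Sketch: list instance names from `ps -ef` text on stdin.
# Lines whose last field is not an asm/ora/mdb pmon process (for example
# the grep process itself) are ignored.
pmon_instances() {
  awk '$NF ~ /^(asm|ora|mdb)_pmon_/ { sub(/^[a-z]+_pmon_/, "", $NF); print $NF }'
}

# Example: poll every 10 seconds in a second terminal while root.sh runs.
#   while sleep 10; do date; ps -ef | pmon_instances; done
```

During the switch, orcldb1 should appear in every sample, while +ASM1 is expected to be missing for a short period.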

[oracle@rac1-oel77-122 ~]$ ps -ef | grep d.bin

oracle 2401 2388 0 11:54 pts/1 00:00:00 /u01/app/19.7.0/grid/perl/bin/perl -I/u01/app/19.7.0/grid/perl/lib -I/u01/app/19.7.0/grid/bin /u01/app/19.7.0/grid/bin/gridSetup.pl -J-DCVU_OS_SETTINGS=SHELL_NOFILE_SOFT_LIMIT:1024,SHELL_STACK_SOFT_LIMIT:10240,SHELL_UMASK:0022 -SwitchGridhome

root 25663 1 4 12:12 ? 00:00:03 /u01/app/19.7.0/grid/bin/ohasd.bin reboot CRS_AUX_DATA=CRS_AUXD_TGIP=yes;_ORA_BLOCKING_STACK_LOCALE=AMERICAN_AMERICA.AL32UTF8

oracle 25750 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/oraagent.bin

oracle 25773 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/gpnpd.bin

oracle 25775 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/mdnsd.bin

oracle 25777 1 2 12:12 ? 00:00:01 /u01/app/19.7.0/grid/bin/evmd.bin

root 25837 1 1 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/orarootagent.bin

oracle 25879 25777 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/evmlogger.bin -o /u01/app/19.7.0/grid/log/[HOSTNAME]/evmd/evmlogger.info -l /u01/app/19.7.0/grid/log/[HOSTNAME]/evmd/evmlogger.log

oracle 25918 1 1 12:12 ? 00:00:01 /u01/app/19.7.0/grid/bin/gipcd.bin

root 25948 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/cssdagent

oracle 25965 1 3 12:12 ? 00:00:02 /u01/app/19.7.0/grid/bin/ocssd.bin -P

root 26118 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/octssd.bin reboot

root 26168 1 4 12:12 ? 00:00:02 /u01/app/19.7.0/grid/bin/crsd.bin reboot

root 26184 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/cssdmonitor

root 26186 1 2 12:12 ? 00:00:01 /u01/app/19.7.0/grid/bin/osysmond.bin

oracle 26600 1 2 12:12 ? 00:00:01 /u01/app/19.7.0/grid/bin/oraagent.bin

root 26610 1 1 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/orarootagent.bin

oracle 26798 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

oracle 26938 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

oracle 26961 1 0 12:12 ? 00:00:00 /u01/app/19.7.0/grid/bin/tnslsnr LISTENER_SCAN1 -no_crs_notify -inherit

root 28287 1 8 12:13 ? 00:00:01 /u01/app/19.7.0/grid/bin/ologgerd -M

oracle 28659 10982 0 12:13 pts/2 00:00:00 grep --color=auto d.bin

[oracle@rac1-oel77-122 ~]$
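The listing above shows the clusterware daemons now running from /u01/app/19.7.0/grid. To confirm the active home without reading the whole listing, the path of the running ohasd.bin can be extracted from the ps output. This is a sketch, and gi_home_from_ps is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the GI home of the running ohasd.bin, taken from
# `ps -ef` text on stdin. Only the first match is printed.
gi_home_from_ps() {
  awk '{
    for (i = 1; i <= NF; i++)
      if ($i ~ /\/bin\/ohasd\.bin$/) {
        sub(/\/bin\/ohasd\.bin$/, "", $i)  # strip the binary, keep the home
        print $i
        exit
      }
  }'
}

# Example:
#   ps -ef | gi_home_from_ps
```

On rac1-oel77-122 this is expected to print the 19.3.0 home before root.sh -transparent runs and the 19.7.0 home afterwards.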

Executing 'root.sh' on rac2-oel77-123

======================================

[oracle@rac2-oel77-123 ~]$ su - root

Password:

[root@rac2-oel77-123 ~]# /u01/app/19.7.0/grid/root.sh -transparent -nodriverupdate

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/19.7.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

LD_LIBRARY_PATH='/u01/app/19.3.0/grid/lib:/u01/app/19.7.0/grid/lib:'

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac2-oel77-123/crsconfig/rootcrs_rac2-oel77-123_2020-08-01_12-15-34AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac2-oel77-123/crsconfig/rootcrs_rac2-oel77-123_2020-08-01_12-15-35AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac2-oel77-123/crsconfig/crs_prepatch_rac2-oel77-123_2020-08-01_12-15-35AM.log

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac2-oel77-123/crsconfig/crs_prepatch_rac2-oel77-123_2020-08-01_12-15-36AM.log

2020/08/01 12:15:45 CLSRSC-347: Successfully unlock /u01/app/19.7.0/grid

2020/08/01 12:15:46 CLSRSC-671: Pre-patch steps for patching GI home successfully completed.

Using configuration parameter file: /u01/app/19.7.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/oracle/crsdata/rac2-oel77-123/crsconfig/crs_postpatch_rac2-oel77-123_2020-08-01_12-15-47AM.log

Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [ROLLING PATCH]. The cluster active patch level is [2701864972].

2020/08/01 12:16:04 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd_dummy.service'

2020/08/01 12:18:35 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.service'

Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [3633918477].

SQL Patching tool version 19.7.0.0.0 Production on Sat Aug 1 12:21:24 2020

Copyright (c) 2012, 2020, Oracle. All rights reserved.

Log file for this invocation: /u01/app/oracle/cfgtoollogs/sqlpatch/sqlpatch_12810_2020_08_01_12_21_24/sqlpatch_invocation.log

Connecting to database...OK

Gathering database info...done

Note: Datapatch will only apply or rollback SQL fixes for PDBs

that are in an open state, no patches will be applied to closed PDBs.

Please refer to Note: Datapatch: Database 12c Post Patch SQL Automation

(Doc ID 1585822.1)

Bootstrapping registry and package to current versions...done

Determining current state...done

Current state of interim SQL patches:

No interim patches found

Current state of release update SQL patches:

Binary registry:

19.7.0.0.0 Release_Update 200404035018: Installed

PDB CDB$ROOT:

Applied 19.6.0.0.0 Release_Update 191217155004 successfully on 01-AUG-20 12.06.56.742690 AM

PDB GIMR_DSCREP_10:

Applied 19.6.0.0.0 Release_Update 191217155004 successfully on 01-AUG-20 12.15.22.558687 AM

PDB PDB$SEED:

Applied 19.6.0.0.0 Release_Update 191217155004 successfully on 01-AUG-20 12.15.22.558687 AM

Adding patches to installation queue and performing prereq checks...done

Installation queue:

For the following PDBs: CDB$ROOT PDB$SEED GIMR_DSCREP_10

No interim patches need to be rolled back

Patch 30869156 (Database Release Update : 19.7.0.0.200414 (30869156)):

Apply from 19.6.0.0.0 Release_Update 191217155004 to 19.7.0.0.0 Release_Update 200404035018

No interim patches need to be applied

Installing patches...

Patch installation complete. Total patches installed: 3

Validating logfiles...done

Patch 30869156 apply (pdb CDB$ROOT): SUCCESS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/30869156/23493838/30869156_apply__MGMTDB_CDBROOT_2020Aug01_12_22_52.log (no errors)

Patch 30869156 apply (pdb PDB$SEED): SUCCESS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/30869156/23493838/30869156_apply__MGMTDB_PDBSEED_2020Aug01_12_24_25.log (no errors)

Patch 30869156 apply (pdb GIMR_DSCREP_10): SUCCESS

logfile: /u01/app/oracle/cfgtoollogs/sqlpatch/30869156/23493838/30869156_apply__MGMTDB_GIMR_DSCREP_10_2020Aug01_12_24_25.log (no errors)

SQL Patching tool complete on Sat Aug 1 12:26:27 2020

2020/08/01 12:27:25 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2020/08/01 12:27:31 CLSRSC-672: Post-patch steps for patching GI home successfully completed.

[root@rac2-oel77-123 ~]#
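The root.sh output above prints the cluster upgrade state and active patch level in the same one-line format that `crsctl query crs activeversion -f` returns. The following is a minimal sketch for pulling those two values out of that line, for example to confirm the state is back to NORMAL after the last node is patched. The helper names `cluster_upgrade_state` and `cluster_patch_level` are hypothetical, and the sample line is copied from the log above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the one-line status from stdin and print the
# value inside "The cluster upgrade state is [...]".
cluster_upgrade_state() {
  sed -n 's/.*cluster upgrade state is \[\([^]]*\)\].*/\1/p'
}

# Hypothetical helper: print the value inside
# "The cluster active patch level is [...]".
cluster_patch_level() {
  sed -n 's/.*active patch level is \[\([^]]*\)\].*/\1/p'
}

# Sample line copied from the root.sh output above.
sample='Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [3633918477].'

printf '%s\n' "$sample" | cluster_upgrade_state   # prints NORMAL
printf '%s\n' "$sample" | cluster_patch_level     # prints 3633918477
```

On a live node the same helpers could be fed from the new home, e.g. `/u01/app/19.7.0/grid/bin/crsctl query crs activeversion -f | cluster_upgrade_state`.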

Note: Check the status of the database instance (orcldb2).

Check the status from NEW GRID HOME (/u01/app/19.7.0/grid)

==========================================================

[oracle@rac1-oel77-122 ~]$ ps -ef | grep pmon

oracle 2216 1 0 13:05 ? 00:00:00 ora_pmon_orcldb1

oracle 2344 1 0 13:05 ? 00:00:00 mdb_pmon_-MGMTDB

oracle 5621 3763 0 13:06 pts/1 00:00:00 grep --color=auto pmon

oracle 32375 1 0 13:05 ? 00:00:00 asm_pmon_+ASM1

[oracle@rac1-oel77-122 ~]$
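The `ps -ef | grep pmon` check above also lists the `grep` process itself. A small sketch that keeps only the real PMON background processes is shown below; the function name `pmon_instances` is hypothetical, and the sample lines are copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ps -ef` output on stdin and print only the
# process names that look like <prefix>_pmon_<instance>.
pmon_instances() {
  awk '$NF ~ /_pmon_/ { print $NF }'
}

# Sample lines copied from the transcript above.
ps_sample='oracle 2216 1 0 13:05 ? 00:00:00 ora_pmon_orcldb1
oracle 2344 1 0 13:05 ? 00:00:00 mdb_pmon_-MGMTDB
oracle 5621 3763 0 13:06 pts/1 00:00:00 grep --color=auto pmon
oracle 32375 1 0 13:05 ? 00:00:00 asm_pmon_+ASM1'

# The grep line is dropped because its last field is just "pmon".
printf '%s\n' "$ps_sample" | pmon_instances
```

On a live node this would be used as `ps -ef | pmon_instances`.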

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ cd $ORACLE_HOME/OPatch

[oracle@rac1-oel77-122 OPatch]$ ./opatch lspatches

30898856;TOMCAT RELEASE UPDATE 19.0.0.0.0 (30898856)

30894985;OCW RELEASE UPDATE 19.7.0.0.0 (30894985)

30869304;ACFS RELEASE UPDATE 19.7.0.0.0 (30869304)

30869156;Database Release Update : 19.7.0.0.200414 (30869156)

OPatch succeeded.

[oracle@rac1-oel77-122 OPatch]$
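To confirm that the new Grid home carries the expected 19.7.0 patches, the `opatch lspatches` listing above can be checked by patch ID. This is only a sketch: the function name `has_patch` is hypothetical, and the sample text is copied from the output above rather than read from a live inventory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given patch ID appears as the first
# ";"-separated field of `opatch lspatches` output read from stdin.
has_patch() {
  awk -F';' -v id="$1" '$1 == id { found = 1 } END { exit !found }'
}

# Sample output copied from the transcript above.
lspatches_sample='30898856;TOMCAT RELEASE UPDATE 19.0.0.0.0 (30898856)
30894985;OCW RELEASE UPDATE 19.7.0.0.0 (30894985)
30869304;ACFS RELEASE UPDATE 19.7.0.0.0 (30869304)
30869156;Database Release Update : 19.7.0.0.200414 (30869156)'

# Check the OCW and Database release update IDs shown above.
for id in 30894985 30869156; do
  if printf '%s\n' "$lspatches_sample" | has_patch "$id"; then
    echo "patch $id present"
  else
    echo "patch $id MISSING"
  fi
done
```

On a live node the input would come from `$ORACLE_HOME/OPatch/opatch lspatches | has_patch 30869156`.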

Stop and Start the cluster from NEW GRID HOME (/u01/app/19.7.0/grid) to check for any issues

============================================================================================

Note: This is just to make sure everything is working fine.

[root@rac1-oel77-122 ~]# ./grid_env

[root@rac1-oel77-122 ~]# . oraenv

ORACLE_SID = [root] ? +ASM1

ORACLE_HOME = [/home/oracle] ? /u01/app/19.7.0/grid

The Oracle base has been set to /u01/app/oracle

[root@rac1-oel77-122 ~]#

[root@rac1-oel77-122 ~]# crsctl stop cluster -all

CRS-2673: Attempting to stop 'ora.crsd' on 'rac2-oel77-123'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.crsd' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.orcldb.db' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.chad' on 'rac2-oel77-123'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.qosmserver' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.orcldb.db' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.chad' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.mgmtdb' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.orcldb.db' on 'rac2-oel77-123' succeeded

CRS-33673: Attempting to stop resource group 'ora.asmgroup' on server 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.DATADG.dg' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.OCRVD.dg' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.RECODG.dg' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.DATADG.dg' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.OCRVD.dg' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.RECODG.dg' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.scan1.vip' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.rac2-oel77-123.vip' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.scan1.vip' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.rac2-oel77-123.vip' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.chad' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.orcldb.db' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN3.lsnr' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.cvu' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.LISTENER_SCAN3.lsnr' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.scan3.vip' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.scan2.vip' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.asm' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.cvu' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.scan3.vip' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.scan2.vip' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.qosmserver' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.asmnet1.asmnetwork' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.asmnet1.asmnetwork' on 'rac2-oel77-123' succeeded

CRS-33677: Stop of resource group 'ora.asmgroup' on server 'rac2-oel77-123' succeeded.

CRS-2677: Stop of 'ora.chad' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.ons' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.net1.network' on 'rac2-oel77-123' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac2-oel77-123' has completed

CRS-2677: Stop of 'ora.crsd' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.storage' on 'rac2-oel77-123'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.storage' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.evmd' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.asm' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.ctssd' on 'rac2-oel77-123' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac2-oel77-123'

CRS-2677: Stop of 'ora.mgmtdb' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.MGMTLSNR' on 'rac1-oel77-122'

CRS-33673: Attempting to stop resource group 'ora.asmgroup' on server 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.OCRVD.dg' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.DATADG.dg' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.RECODG.dg' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.OCRVD.dg' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.DATADG.dg' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.RECODG.dg' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.asm' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.cssd' on 'rac2-oel77-123' succeeded

CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.asmnet1.asmnetwork' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.asmnet1.asmnetwork' on 'rac1-oel77-122' succeeded

CRS-33677: Stop of resource group 'ora.asmgroup' on server 'rac1-oel77-122' succeeded.

CRS-2677: Stop of 'ora.MGMTLSNR' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.rac1-oel77-122.vip' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.rac1-oel77-122.vip' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.ons' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.net1.network' on 'rac1-oel77-122' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac1-oel77-122' has completed

CRS-2677: Stop of 'ora.crsd' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.storage' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.storage' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.evmd' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.asm' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac1-oel77-122'

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.ctssd' on 'rac1-oel77-122' succeeded

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac1-oel77-122' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac1-oel77-122'

CRS-2677: Stop of 'ora.cssd' on 'rac1-oel77-122' succeeded

[root@rac1-oel77-122 ~]#

[root@rac1-oel77-122 ~]# crsctl start cluster -all

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1-oel77-122'

CRS-2672: Attempting to start 'ora.evmd' on 'rac2-oel77-123'

CRS-2672: Attempting to start 'ora.evmd' on 'rac1-oel77-122'

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1-oel77-122'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1-oel77-122'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac2-oel77-123' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.diskmon' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.diskmon' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.diskmon' on 'rac2-oel77-123' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac1-oel77-122' succeeded

CRS-2676: Start of 'ora.evmd' on 'rac2-oel77-123' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac2-oel77-123' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.ctssd' on 'rac1-oel77-122'

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac1-oel77-122'

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac2-oel77-123'

CRS-2672: Attempting to start 'ora.ctssd' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.ctssd' on 'rac1-oel77-122' succeeded

CRS-2676: Start of 'ora.ctssd' on 'rac2-oel77-123' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac1-oel77-122'

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac2-oel77-123' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.asm' on 'rac2-oel77-123' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.asm' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'rac1-oel77-122'

CRS-2676: Start of 'ora.storage' on 'rac1-oel77-122' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac1-oel77-122'

CRS-2676: Start of 'ora.crsd' on 'rac1-oel77-122' succeeded

CRS-2676: Start of 'ora.storage' on 'rac2-oel77-123' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac2-oel77-123'

CRS-2676: Start of 'ora.crsd' on 'rac2-oel77-123' succeeded

[root@rac1-oel77-122 ~]# exit

logout

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.chad

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.net1.network

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

ora.ons

ONLINE ONLINE rac1-oel77-122 STABLE

ONLINE ONLINE rac2-oel77-123 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 ONLINE OFFLINE STABLE

ora.DATADG.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.MGMTLSNR

1 ONLINE ONLINE rac1-oel77-122 169.254.18.68 10.1.4

.122,STABLE

ora.OCRVD.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.RECODG.dg(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 Started,STABLE

2 ONLINE ONLINE rac2-oel77-123 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1-oel77-122 STABLE

2 ONLINE ONLINE rac2-oel77-123 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.mgmtdb

1 ONLINE ONLINE rac1-oel77-122 Open,STABLE

ora.orcldb.db

1 ONLINE ONLINE rac1-oel77-122 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

2 ONLINE ONLINE rac2-oel77-123 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.rac1-oel77-122.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.rac2-oel77-123.vip

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2-oel77-123 STABLE

ora.scan2.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

ora.scan3.vip

1 ONLINE ONLINE rac1-oel77-122 STABLE

--------------------------------------------------------------------------------

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ db_env

[oracle@rac1-oel77-122 ~]$ srvctl status database -d orcldb

Instance orcldb1 is running on node rac1-oel77-122

Instance orcldb2 is running on node rac2-oel77-123

[oracle@rac1-oel77-122 ~]$
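After the restart, the `srvctl status database` output above can be scanned for instances that did not come back. A minimal sketch follows; the function name `not_running_instances` is hypothetical, and the sample text is copied from the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `srvctl status database -d <db>` output on stdin
# and print the name of every instance reported as "is not running".
not_running_instances() {
  awk '/^Instance .* is not running/ { print $2 }'
}

# Sample output copied from the transcript above (both instances are up).
status_sample='Instance orcldb1 is running on node rac1-oel77-122
Instance orcldb2 is running on node rac2-oel77-123'

down="$(printf '%s\n' "$status_sample" | not_running_instances)"
if [ -z "$down" ]; then
  echo "all instances running"
else
  echo "instances down: $down"
fi
```

On a live node the input would come from `srvctl status database -d orcldb | not_running_instances`.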

[oracle@rac1-oel77-122 ~]$ grid_env

[oracle@rac1-oel77-122 ~]$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Sat Aug 1 15:14:02 2020

Version 19.7.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.7.0.0.0

SQL> select instance_name,instance_number from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER

---------------- ---------------

+ASM2 2

+ASM1 1

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.7.0.0.0

[oracle@rac1-oel77-122 ~]$

[oracle@rac1-oel77-122 ~]$ db_env

[oracle@rac1-oel77-122 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Sat Aug 1 15:14:36 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

4 PDB2 READ WRITE NO

SQL> select instance_name,instance_number from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER

---------------- ---------------

orcldb1 1

orcldb2 2

SQL>

Note: ACFS Drivers:

===================

Using Oracle Zero-Downtime Patching with ACFS:

When using Oracle Zero-Downtime Patching, the opatch inventory displays the new patch number; however, only the Oracle Grid Infrastructure user-space binaries are actually patched. Oracle Grid Infrastructure OS kernel modules such as ACFS are not updated and continue to run the pre-patch version.

The updated ACFS drivers are loaded automatically if the nodes in the cluster are rebooted (e.g. to install an OS update). Otherwise, to load the updated ACFS drivers, the user must stop the CRS stack, run `root.sh -updateosfiles`, and restart the CRS stack on each node of the cluster.

To subsequently verify the installed and running driver versions, run `crsctl query driver softwareversion` and `crsctl query driver activeversion` on each node of the cluster.
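The per-node sequence in the note above can be sketched as a dry-run helper that only prints the commands, so they can be reviewed before being run as root. The function name `acfs_driver_update_plan` is hypothetical, the Grid home path is the one used in this environment, and `crsctl stop crs` / `crsctl start crs` are assumed here as the way to stop and restart the stack on a single node. Note that stopping the CRS stack also stops the database instance on that node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (do not run) the commands for updating the
# ACFS drivers on one node, given the Grid home as the first argument.
acfs_driver_update_plan() {
  local grid_home="$1"
  printf '%s\n' \
    "$grid_home/bin/crsctl stop crs" \
    "$grid_home/root.sh -updateosfiles" \
    "$grid_home/bin/crsctl start crs" \
    "$grid_home/bin/crsctl query driver softwareversion" \
    "$grid_home/bin/crsctl query driver activeversion"
}

# Print the plan for the new Grid home used in this post.
acfs_driver_update_plan /u01/app/19.7.0/grid
```

Printing the plan rather than executing it keeps the sketch safe to paste; each printed line would be run as root, one node at a time.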

Hope it helps. If you have any suggestions for improvement, please let me know. This post is based on my environment and knowledge.